Alessandro Ragano

Nominated Award:

Best Application of AI in a Student Project

Website of Company (or LinkedIn profile of Person):

https://qxlab.ucd.ie/index.php/people/

With over one thousand students on our Dublin campus, the UCD School of Computer Science is the largest computer science department in Ireland. This work was a collaboration between the Science Foundation Ireland Insight Centre for Data Analytics at UCD and the Alan Turing Institute. Insight is one of the largest data analytics research centres in Europe. It seeks to derive value from Big Data and provides innovative technology solutions for industry and society by enabling better decision-making. The Alan Turing Institute, headquartered in the British Library, London, has a mission to make great leaps in data science and artificial intelligence research in order to change the world for the better. As part of his PhD, Alessandro spent nine months hosted at the Alan Turing Institute and as a visiting researcher at Queen Mary University of London.

Reason for Nomination:

Since the invention of recording technology, numerous audio formats have emerged, allowing people to record sound. Organisations have started to collect recordings to support collective memory, preserve cultural heritage and provide access for academic research.

To increase accessibility and preserve materials, archivists have begun to digitise sound recordings such as music. However, digitisation is a delicate operation, and the original content can easily be altered in the process. In addition to digitisation, restoration techniques can improve the audibility of archived sound to provide a modern listening experience.

Assessing the quality of the above operations is fundamental to evaluating sound archive practices. During the digitisation process, human error can impact the final result and digital restoration can introduce new distortions.

To improve sound archive usability (e.g. searching, selecting documents), organisations provide metadata that can be used to retrieve documents. Metadata might include useful information such as the composer, notes about the event, the genre, etc. However, other kinds of metadata are missing. In particular, information about recording quality is rarely provided, and where it is, it is mostly labelled with very broad attributes that do not allow users to find items efficiently. For instance, web archives of classical music might include multiple recordings of the same composition with different sound quality. Having quality labels in the metadata can help users make better use of the archive.

Quality assessment of sound archives has not previously been addressed from the perspective of archive users. This is a new field: while data-driven models exist for applications like measuring speech quality on telephone or Zoom calls, the challenge is much more difficult for sound archives because there is no copy of what the original recording was meant to sound like. If there are scratches on the recording, what are they masking? This project achieved four major objectives to improve sound archive quality and help to develop AI-based tools that make our audio cultural heritage more accessible:

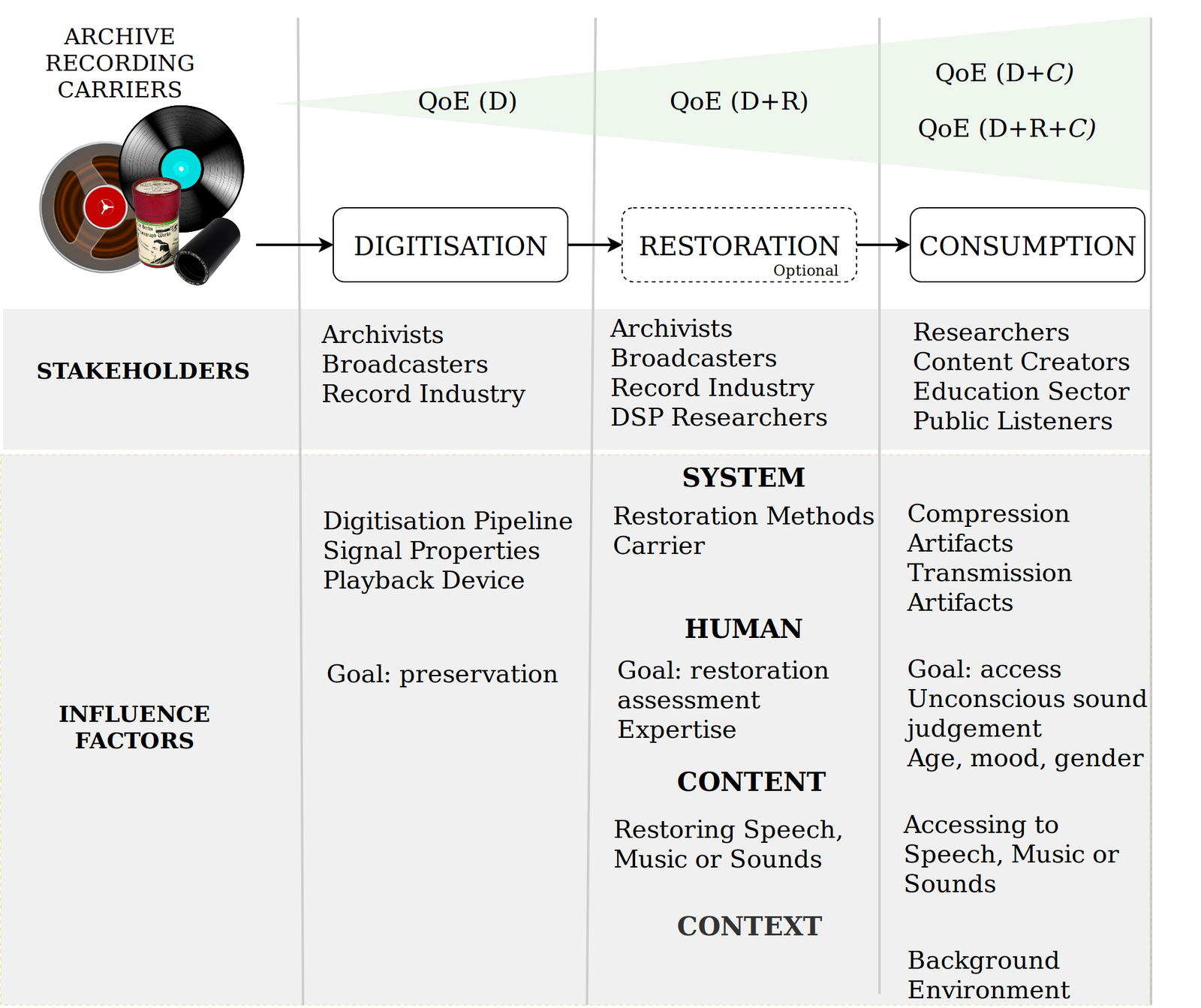

* Develop a roadmap of what quality means to the different stakeholders in sound archives for digitisation, restoration, and consumption.

* Explore the use of data for training models using real world data or synthetic data (e.g. simulated vinyl records scratches and hiss).

* Understand how best to label training and validation data for quality evaluation.

* Develop, adapt and evaluate a range of deep learning models using novel datasets and features to predict the quality of music stored in sound archives.
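
To illustrate the synthetic-data objective above, the following is a minimal sketch of how vinyl-style hiss and scratches might be simulated on a clean signal. It is not the project's actual pipeline: the function name `simulate_vinyl_degradation`, its parameters and the default values are all hypothetical, hiss is assumed to be white Gaussian noise at a chosen signal-to-noise ratio, and scratches are assumed to be single-sample impulses at random positions.

```python
import numpy as np


def simulate_vinyl_degradation(clean, sample_rate, hiss_snr_db=20.0,
                               clicks_per_second=2.0, click_amplitude=0.8,
                               seed=0):
    """Add white-noise hiss and impulsive clicks to a clean mono signal.

    Illustrative assumption only: real vinyl noise is more complex than
    white noise plus single-sample impulses.
    """
    rng = np.random.default_rng(seed)
    clean = np.asarray(clean, dtype=np.float64)

    # Hiss: Gaussian noise scaled so the signal-to-noise ratio is hiss_snr_db.
    signal_power = np.mean(clean ** 2)
    noise_power = signal_power / (10.0 ** (hiss_snr_db / 10.0))
    hiss = rng.normal(0.0, np.sqrt(noise_power), size=clean.shape)

    # Scratches: impulses of random polarity at random sample positions.
    duration_s = len(clean) / sample_rate
    n_clicks = int(round(clicks_per_second * duration_s))
    clicks = np.zeros_like(clean)
    positions = rng.integers(0, len(clean), size=n_clicks)
    clicks[positions] = click_amplitude * rng.choice([-1.0, 1.0], size=n_clicks)

    return clean + hiss + clicks


# Usage: degrade one second of a 440 Hz tone sampled at 16 kHz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
clean = 0.5 * np.sin(2.0 * np.pi * 440.0 * t)
degraded = simulate_vinyl_degradation(clean, sample_rate)
```

Pairs of clean and degraded signals generated in this way could serve as labelled training examples, since the type and severity of each impairment are known by construction.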

As a musician and a computer scientist, I was able to bring together two passions in this project: music and computer science. It was an opportunity to work with researchers in UCD’s Insight Centre for Data Analytics and at Queen Mary University of London, which have expertise in machine learning and in music signal processing respectively. Spending time in the Alan Turing Institute at the British Library also gave me the opportunity to discuss the issues and challenges with archivists first-hand. The project also gave me the opportunity to work with researchers at Google and co-author a paper with them on machine learning quality metrics.

Additional Information:

This project has resulted in a number of peer-reviewed journal and international conference papers.

A Ragano, E Benetos, and A Hines. Automatic Quality Assessment of Digitized and Restored Sound Archives. Journal of the Audio Engineering Society, vol. 70, no. 4, pp. 252-270, 2022.

A Ragano, E Benetos, and A Hines. More for Less: Non-Intrusive Speech Quality Assessment with Limited Annotations. 13th International Conference on Quality of Multimedia Experience (QoMEX), IEEE, pp. 103-108, 2021.

A Ragano, E Benetos, and A Hines. Development of a Speech Quality Database under Uncontrolled Conditions. Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), pp. 4616-4620, 2020.

A Ragano, E Benetos, and A Hines. Audio Impairment Recognition using a Correlation-based Feature Representation. 12th International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2020.

A Ragano, E Benetos, and A Hines. Adapting the Quality of Experience Framework for Audio Archives. 11th International Conference on Quality of Multimedia Experience (QoMEX), IEEE, 2019.

HB Martinez, A Ragano, and A Hines. Exploring the influence of fine-tuning data on wav2vec 2.0 model for blind speech quality prediction. Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), 2022.

M Chinen, J Skoglund, CKA Reddy, A Ragano and A Hines. Using Rater and System Metadata to Explain Variance in the VoiceMOS Challenge 2022 Dataset. Proceedings of the Annual Conference of the International Speech Communication Association (Interspeech), 2022.

E Benetos, A Ragano, D Sgroi, and A Tuckwell. Measuring national mood with music: using machine learning to construct a measure of national valence from audio data. Behavior Research Methods, 2022.

The datasets, models and metadata will be shared to promote further research in this area after publication of the PhD thesis, which will be defended later in 2022.