Bank of Ireland

Nominated Award:

Best Application of AI in a Large Enterprise

Website of Company:

https://www.bankofireland.com/

The Bank of Ireland Group, established by Royal Charter in 1783, is a diversified Financial Services Group. We are committed to supporting our customers brilliantly by providing a relationship-driven Retail and Commercial banking experience. Our ambition is to be the National Champion Bank in Ireland, with UK and selective international diversification. We have four key values which empower colleagues to fulfil our purpose of “Enabling our customers, colleagues and communities to thrive”. These are: being Customer Focused, Agile, Accountable, and working as One Team.

Bank of Ireland is currently undergoing a major transformation to become the leading Digital Relationship Bank (DRB). The DRB project will facilitate the delivery of an enhanced, more personalised banking experience for customers across multiple channels, while also retaining the personal relationship with customers which is a crucial component of the traditional banking model.

The Data Science team sits within the Customer Analytics and Transformation department of the Retail Marketing function and supports our customers and colleagues across Ireland and the UK. The team is leading the charge to enable and deliver personalised experiences through data analytics and the application of machine learning and artificial intelligence. In a transforming, competitive market environment, customer expectations continue to grow and there is an increasing demand for personalised customer experiences. To deliver on this vision, the team leverages internal and external data to build state-of-the-art machine learning models that drive the growth and retention marketing activities of the Bank. The team also uses data analytics, knowledge discovery and visualisation techniques to generate heuristics and business rules that are combined with model-driven decisions to maximise value and deliver positive outcomes for customers and business stakeholders.

Building upon years of expertise developed in optimising customer experience and interactions, the Data Science team has engaged with other areas of the Bank of Ireland Group to apply its machine learning and artificial intelligence knowledge and skills, aiming to make both evolutionary and revolutionary improvements to how the Group operates and to enable our colleagues to thrive. These engagements include, amongst many others, projects delivering improved efficiency and successful customer outcomes in arrears management, financial wellbeing and customer service management.

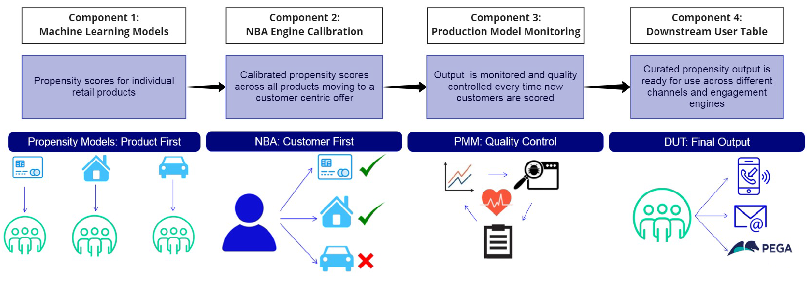

A notable recent milestone has been the implementation of the Downstream User Table, a capstone project that completes the maturity journey of a cohort of machine learning models implemented for customer propensity modelling. Individual propensity scores for different retail banking products are calibrated using a next-best-action ranking engine that allows for fair comparison between different products, enabling the customer to receive the most appropriate proposition. The output is subsequently monitored by an automated framework that flags potential concerns and quarantines the output if it does not meet the criteria. Lastly, a final component selects, from the models that have passed the quality criteria, the best model for each application, leaving a single business-ready output. This is an example of achieving the highest maturity stage in a production-ready application of machine learning pipelines for propensity modelling.

Reason for Nomination:

When deploying machine learning models in a production environment, questions arise about how to compare probabilities across different models and how to monitor their output. How can we compare across models for a variety of retail banking products that are very different in nature? In other words, if a customer has a propensity for both a first-time buyer mortgage and a car loan, which offer should be prioritised? How can we ascertain that the output of different models remains accurate and representative of customer behaviour some time after deployment? And how can we offer downstream users a single view of validated, accurate and ready-to-use output for a variety of purposes: marketing campaigns, insights and customer engagement?

The Downstream User Table (DUT) project is designed to answer all these questions in an automated fashion with minimal human supervision, through configuration files that set the parameters and behaviours of the different components in the architecture. The DUT has a modular architecture that takes raw propensity output from different models as input and offers a calibrated, quality-controlled and ready-to-use output for business application. The project has been delivered in sequential stages during the last two years and is the capstone component of an architecture that takes state-of-the-art machine learning model output, calibrates the probabilities, monitors the results and prepares a business-ready application, all in an automated way. The business application of these leads ranges from direct marketing campaigns across traditional channels (phone, email) to use in online and digital channels.

The first step of the journey was delivered around June 2020 and consisted of the deployment to production of a cohort of circa 20 machine learning models scoring the propensity of customers to purchase a particular retail banking product from diverse business areas: mortgage, lending, wealth, business. These models were built and delivered to obtain an accurate prediction of customers’ propensity to acquire a particular product. Although accurate within the specific application of each model, this output does not offer a customer-centric framework: comparison across product verticals is not possible unless the predicted propensities are calibrated to represent the true posterior probability of the actual sales that took place. The next stage of the journey addresses this limitation by implementing a Next Best Action (NBA) engine that calibrates the propensity of each of these models based on the posterior probability observed in past sales, enabling comparison across products in a customer-centric view. This component effectively moves from a product-centric approach, where accurate leads are generated for each product, to a customer-centric approach, where we are able to offer the best proposition to the customer in any given context and at any given time. This proposal delivers greatly on the Bank’s vision to become the leading DRB and represents a milestone in the customer experience and engagement that we are able to offer. The NBA engine was delivered circa June 2021 and represented the second component of the overall journey.
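To illustrate why calibration makes scores comparable across products, the following is a minimal sketch rather than the Bank's implementation. It assumes an isotonic calibrator fitted with the pool-adjacent-violators algorithm on past sales outcomes; the function names (`fit_isotonic`, `calibrate`, `next_best_action`), the product names and all figures are hypothetical toy data.

```python
from bisect import bisect_left


def fit_isotonic(scores, outcomes):
    """Fit a non-decreasing step function from raw score to observed sales
    rate using the pool-adjacent-violators algorithm (illustrative only)."""
    # Aggregate identical scores so that ties share one block.
    grouped = {}
    for score, outcome in zip(scores, outcomes):
        total, count = grouped.get(score, (0.0, 0))
        grouped[score] = (total + outcome, count + 1)

    blocks = []  # each block: [sum of outcomes, customers, highest score]
    for score in sorted(grouped):
        total, count = grouped[score]
        blocks.append([total, count, score])
        # Merge backwards while a block's rate exceeds the rate of the next one.
        while len(blocks) > 1 and (
            blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]
        ):
            total, count, upper = blocks.pop()
            blocks[-1][0] += total
            blocks[-1][1] += count
            blocks[-1][2] = upper

    uppers = [b[2] for b in blocks]
    rates = [b[0] / b[1] for b in blocks]
    return uppers, rates


def calibrate(raw_score, calibrator):
    """Map a raw propensity score to the observed sales rate of its block."""
    uppers, rates = calibrator
    idx = min(bisect_left(uppers, raw_score), len(rates) - 1)
    return rates[idx]


def next_best_action(raw_scores, calibrators):
    """Rank products for one customer by calibrated probability, highest first."""
    calibrated = {p: calibrate(s, calibrators[p]) for p, s in raw_scores.items()}
    return sorted(calibrated, key=calibrated.get, reverse=True)


# Hypothetical history: raw scores and whether a sale followed (1) or not (0).
history_scores = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
calibrators = {
    "mortgage": fit_isotonic(history_scores, [0, 0, 0, 0, 0, 1, 0, 1]),
    "car_loan": fit_isotonic(history_scores, [0, 1, 0, 1, 1, 1, 1, 1]),
}

# The mortgage model gives the higher raw score, but past sales show that
# car loan scores at this level convert more often.
customer = {"mortgage": 0.7, "car_loan": 0.5}
ranking = next_best_action(customer, calibrators)
print(ranking)
```

In this toy data the raw mortgage score is higher, yet the car loan ranks first once both scores are mapped to observed sales rates, which is the kind of cross-product comparison the NBA engine is described as enabling.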

The third component was delivered to answer a major challenge when dealing with models deployed in a production environment: monitoring and quality control. This step is a fundamental requirement for the Data Science team to satisfy model risk requirements aimed at auditing and ascertaining that the model output is fit for purpose. The Production Model Monitoring (PMM) framework completes a series of automated checks on the model inputs and outputs after each scoring date. The model inputs and outputs are compared against previous scoring dates. If the inputs and outputs have not changed significantly, this suggests that the model is stable and the propensity model output scores are published as normal.

However, if the inputs and/or outputs have shifted significantly, the Data Science team will receive an alert notification and, in some cases, the output will be placed directly in quarantine. This allows the model to be investigated: it may need to be retrained or rebuilt, or the features and/or exclusions that define the customer base may need to be challenged. In extreme cases, the output scores may not be published. The architecture of the PMM component currently includes checks on 5 metrics, which can easily be expanded if new requirements arise.
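As an illustration of how a shift between scoring dates might be quantified, the sketch below computes a Population Stability Index (PSI) between two sets of scores. This is an assumption for illustration only: the five metrics used by the PMM framework are not named here, and PSI is simply one commonly used drift measure. The function name, bin count and sample data are hypothetical.

```python
import math


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI between two samples of scores in [0, 1] using equal-width bins.

    A value of 0 means identical binned distributions; larger values
    indicate a larger shift between the two scoring dates.
    """

    def proportions(values):
        counts = [0] * n_bins
        for value in values:
            # Clamp so that a score of exactly 1.0 falls in the last bin.
            idx = min(int(value * n_bins), n_bins - 1)
            counts[idx] += 1
        total = len(values)
        # Floor at eps to avoid taking the logarithm of zero for empty bins.
        return [max(count / total, eps) for count in counts]

    ref = proportions(reference)
    cur = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


# Hypothetical scores from a previous and a current scoring date.
previous_scores = [i / 100 for i in range(100)]
stable_scores = list(previous_scores)
shifted_scores = [min(score + 0.3, 0.99) for score in previous_scores]

psi_stable = population_stability_index(previous_scores, stable_scores)
psi_shifted = population_stability_index(previous_scores, shifted_scores)
print(psi_stable, psi_shifted)
```

The same comparison could be applied to model inputs (one feature at a time) as well as to output scores.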

The fourth and last component was recently delivered (July 2022) and represents the last step required to offer a business-ready view from different propensity models. The DUT arbitrates between all available output, including different scoring dates and circa 20 different models (some of which offer output for the same product), and creates a single view per product or application in which the latest available scoring date that passed all control checks is available and ready to go. This step removes the ambiguity of having many different dates and models for the same product and guarantees that the choice of leads for a particular product is unequivocally the best and most recent. By referencing a model reference table, the Data Science team can also directly influence the lifecycle of machine learning models through a procedure that automatically decommissions, retrains, demotes and/or promotes models to become the champion model for a particular product.
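The selection logic described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the production code: the record fields (`model_id`, `scoring_date`, `passed_checks`), the `status` values and the model names are all hypothetical, and the real system operates on cluster tables rather than in-memory lists.

```python
def build_downstream_view(outputs, model_reference):
    """For each product, keep the champion model's most recent output
    that passed all monitoring checks."""
    view = {}
    for row in outputs:
        ref = model_reference.get(row["model_id"])
        # Skip unknown models and anything that is not the current champion.
        if ref is None or ref["status"] != "champion":
            continue
        # Skip quarantined output.
        if not row["passed_checks"]:
            continue
        product = ref["product"]
        best = view.get(product)
        # ISO-formatted dates compare correctly as strings.
        if best is None or row["scoring_date"] > best["scoring_date"]:
            view[product] = row
    return view


# Hypothetical model reference table (in practice maintained as a csv file).
model_reference = {
    "mortgage_v1": {"product": "mortgage", "status": "decommissioned"},
    "mortgage_v2": {"product": "mortgage", "status": "champion"},
    "car_loan_v1": {"product": "car_loan", "status": "champion"},
}

# Hypothetical scoring runs with the outcome of the monitoring checks.
outputs = [
    {"model_id": "mortgage_v1", "scoring_date": "2022-07-01", "passed_checks": True},
    {"model_id": "mortgage_v2", "scoring_date": "2022-06-15", "passed_checks": True},
    {"model_id": "mortgage_v2", "scoring_date": "2022-07-01", "passed_checks": False},
    {"model_id": "car_loan_v1", "scoring_date": "2022-06-15", "passed_checks": True},
    {"model_id": "car_loan_v1", "scoring_date": "2022-07-01", "passed_checks": True},
]

view = build_downstream_view(outputs, model_reference)
for product, row in sorted(view.items()):
    print(product, row["model_id"], row["scoring_date"])
```

In this example the most recent mortgage run was quarantined, so the view falls back to the champion's previous scoring date, while promoting or decommissioning a model only requires a change to the reference table.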

The DUT Project makes use of state-of-the-art machine learning pipelines built using Spark and stored on a Hadoop cluster. Models are built and deployed on the cluster and scheduled to run bi-monthly, offering scores for a customer base particular to each model. The analytical tasks are performed using Spark, while the input and output of these Spark applications come in the form of tables that are read and written directly in the Hadoop cluster. This hybrid approach leverages the benefits of a powerful, parallelised and scalable analytical engine (Spark) and combines it seamlessly with read and write access to a Hadoop cluster through two different SQL engines: one optimised for speed and query statements (Impala) and another optimised for definition and manipulation statements (Hive).

Additional Information:

The Downstream User Table represents the culmination of a two-year journey to achieve the highest maturity possible in the application of machine learning pipelines in a production environment. The system takes care of the whole lifecycle of the models, from deployment and calibration of a new model, through monitoring, to a final step that produces a ready-to-use single business view enabling a myriad of applications: self-serve for exploratory data analysis or ad-hoc insight derivation, campaign leads for marketing purposes and propensity output for use in engagement engines downstream.

The project is fully automated, with minimal human intervention required, and features the following points of configuration and editing:

• Model reference table in csv format for the inclusion of new model builds, re-trains, re-builds, decommissioning or alteration of the attributes of models.

• Configuration file in json format for the NBA calibration engine that sets configuration parameters for the definition of target views, the conversion windows for posterior probabilities and the type of calibrator to be fitted (two options are currently available: logistic or isotonic regressors).

• Configuration file in json format for the PMM monitoring framework that sets the thresholds for a two-level alert system (a yellow alert as a warning that requires human supervision and an amber alert for automatic quarantining of output), the long- and short-term dates for monitoring comparison, and the specific model features to monitor.
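As a rough illustration of how such a json configuration could drive the two-level alert system, the sketch below parses a small configuration and classifies a monitoring metric. Every key name, threshold value and feature name is hypothetical and chosen only for the example; the actual file layout is not specified here.

```python
import json

# Hypothetical PMM configuration; key names and values are illustrative only.
PMM_CONFIG_JSON = """
{
  "thresholds": {"yellow": 0.10, "amber": 0.25},
  "short_term_days": 30,
  "long_term_days": 180,
  "monitored_features": ["feature_a", "feature_b"]
}
"""


def alert_level(metric_value, thresholds):
    """Classify a monitoring metric using the two-level alert system.

    "yellow" is a warning that requires human supervision;
    "amber" triggers automatic quarantining of the output.
    """
    if metric_value >= thresholds["amber"]:
        return "amber"
    if metric_value >= thresholds["yellow"]:
        return "yellow"
    return "ok"


config = json.loads(PMM_CONFIG_JSON)
thresholds = config["thresholds"]

# Hypothetical metric values observed for three models on one scoring date.
for model_id, value in [("model_a", 0.05), ("model_b", 0.15), ("model_c", 0.30)]:
    print(model_id, alert_level(value, thresholds))
```

Keeping the thresholds in the configuration file means that the sensitivity of the alerts can be adjusted without changing the monitoring code.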

The project was delivered using agile methodology, adding functionality and components in sequential stages over a period of two years. July 2022 marked the milestone of deploying the final capstone component, completing the journey towards what we believe exemplifies the highest maturity of a machine learning application in a production environment.

The uniqueness and value of this project lie in the combination of very distinct components in the architecture that address specific challenges posed by both the customer and the business side. On one side, there is the vision to become best in class in digital customer engagement, and this project delivers on this by putting the customer first and ranking retail products around them. On the other side, there is the requirement from the business to monitor, validate and control the lifecycle of deployed models to guarantee they are always relevant and fit for purpose.

One of the differentiating strengths of this system is its versatility and flexibility. The final leads generated are monitored, calibrated and business ready, which means they can be used in a wide range of applications without further control or processing. Also, through several configuration points in the form of csv and json files, the Data Science team has the flexibility to modify configuration parameters and to add, remove or change models in the cohort of machine learning models. With this system in place, the business not only drives engagement with customers, generating measurable incrementality across different channels, but also maintains a high standard of output quality while adhering to stringent internal and external regulatory requirements.