Kinesense Ltd

Nominated Award: Best Application of AI to achieve Social Good and Best Application of AI in an SME

Website of Company: https://www.kinesense-vca.com/

Company Overview

Kinesense develops video analytics algorithms that enable the automatic searching of video content. We mainly serve the law enforcement and security sectors, but we also regularly lend our expertise to projects such as wildlife monitoring for protecting endangered animals.

Crime-fighting:

CCTV cameras play a key role in deterring and combatting crime in our communities. It is estimated that there is 1 camera for every 13 people in Ireland, or approximately 400,000 in total, with 96% owned by private businesses and homeowners [source: cctv.co.uk survey]. There are an estimated 5,000 different CCTV video formats, many of which are not widely supported by common media players. This means that although a crime may have been captured on video, police officers regularly encounter delays and challenges when trying to open and play back the video file.

Manually reviewing footage is a time-consuming task. While CCTV is crucial for many investigations, the time and effort required to retrieve, view, analyse and report on video footage is a huge drain on police resources. According to a UK national police user survey, “collecting, converting and sharing digital evidence are top ‘pain points’ for police”. Specialist tools for video ingest and analysis are needed to get insights from video faster, and ultimately, help police to secure more convictions and improve crime solving rates.

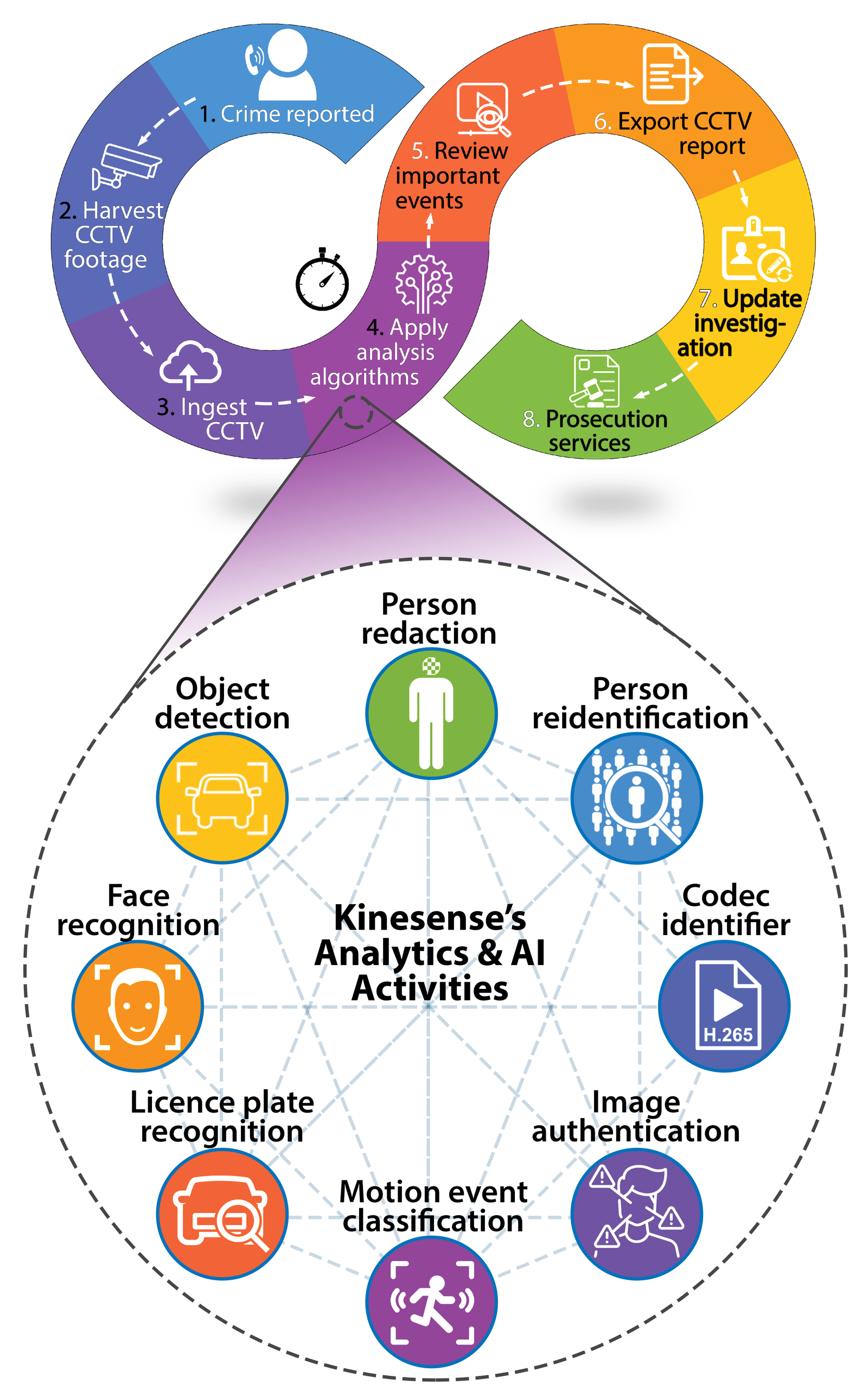

Kinesense provides investigators with an easy-to-use software platform that takes care of everything from ingest right through to creating detailed evidence reports for court (steps 3-6 in the crime-to-court flow diagram in Figure 1), thereby saving valuable police time and eliminating bottlenecks. In the context of the Criminal Justice market, the ability to process CCTV footage quickly and with little effort is pivotal, especially at the early stage of investigations, where time is often of the essence and investigators frequently rely on CCTV to piece together events and establish new leads.

Wildlife Conservation:

For many of Africa’s endangered and critically threatened species, survival hangs perilously in the balance. It is critical for conservationists and policymakers to have insights into animal population levels so that effective strategies can be implemented to protect these vulnerable species. Motion-triggered camera traps are an invaluable tool in this regard; however, they generate vast quantities of data. Wading through millions of images from hundreds or thousands of cameras is a highly laborious task for humans. We helped conservationists in Africa to overcome this obstacle by putting together a standalone AI solution which enables them to detect and classify over 50 animals of interest.

Embracing AI:

Over the years, Kinesense has strived to incorporate the latest advances in deep learning technology into our software suite. Furthermore, Kinesense is engaged in an AI-centric research project, namely the Visual Intelligence Search Platform (VISP), which is a collaboration with the Sigmedia Group in Trinity College Dublin and industry-partner, Overcast. The VISP project seeks to improve workflows for transcoding, analysing, and distributing videos using deep learning video analytics and cloud-based processing technologies.

Reason for Nomination

Social benefit:

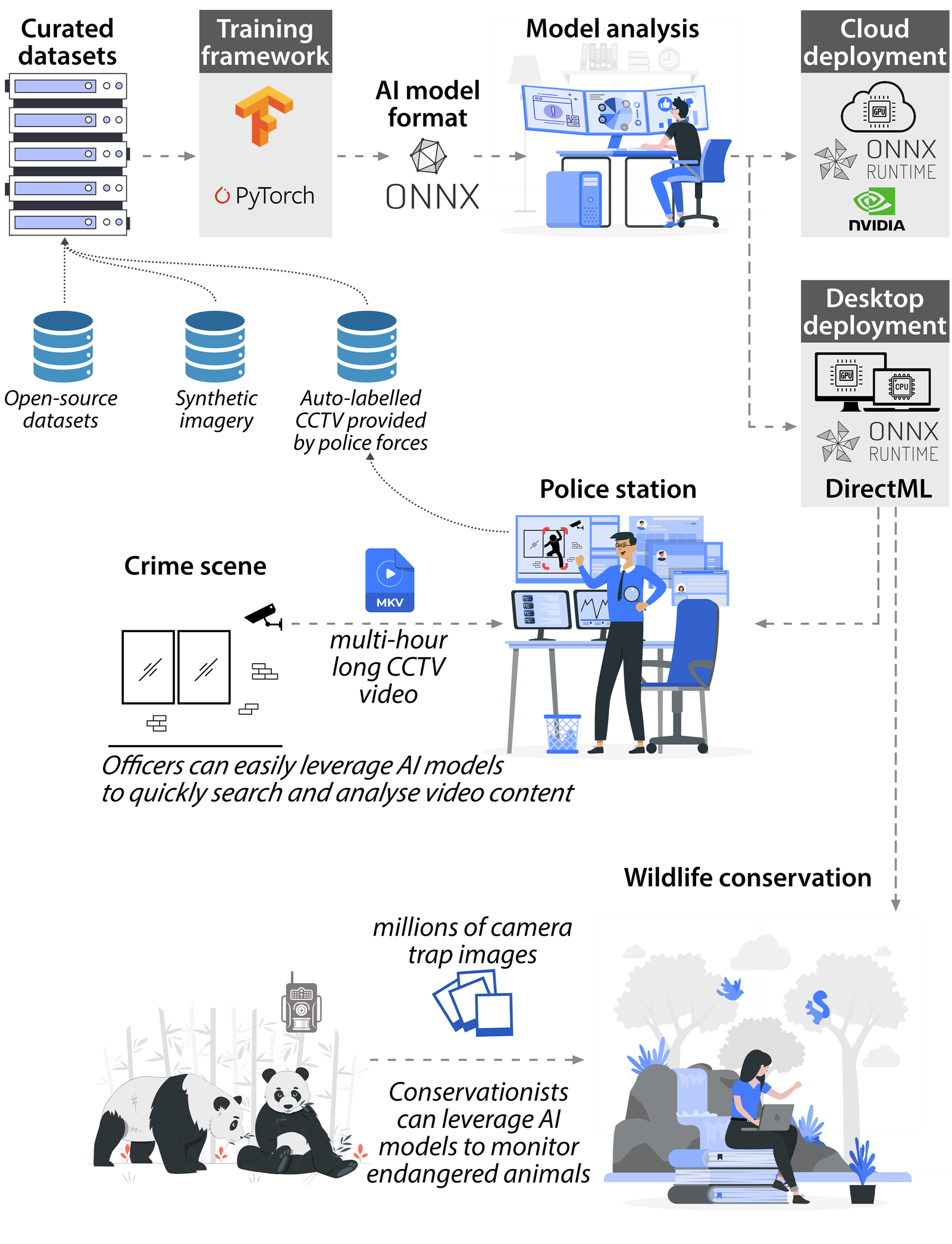

Kinesense allows police to spend less time sifting through videos and more time doing what they are excellent at – safeguarding our communities. At the same time, we help conservationists to efficiently process millions of camera trap images so that they can make informed decisions around intervention strategies, as depicted in Figure 2.

How we leverage AI to achieve Social Good:

Police officers must be able to trust the efficacy of AI models and have confidence that important events are not missed, even when dealing with low-quality CCTV footage. Our carefully curated datasets serve as the foundation for creating powerful AI models.

Motivation for creating our own datasets:

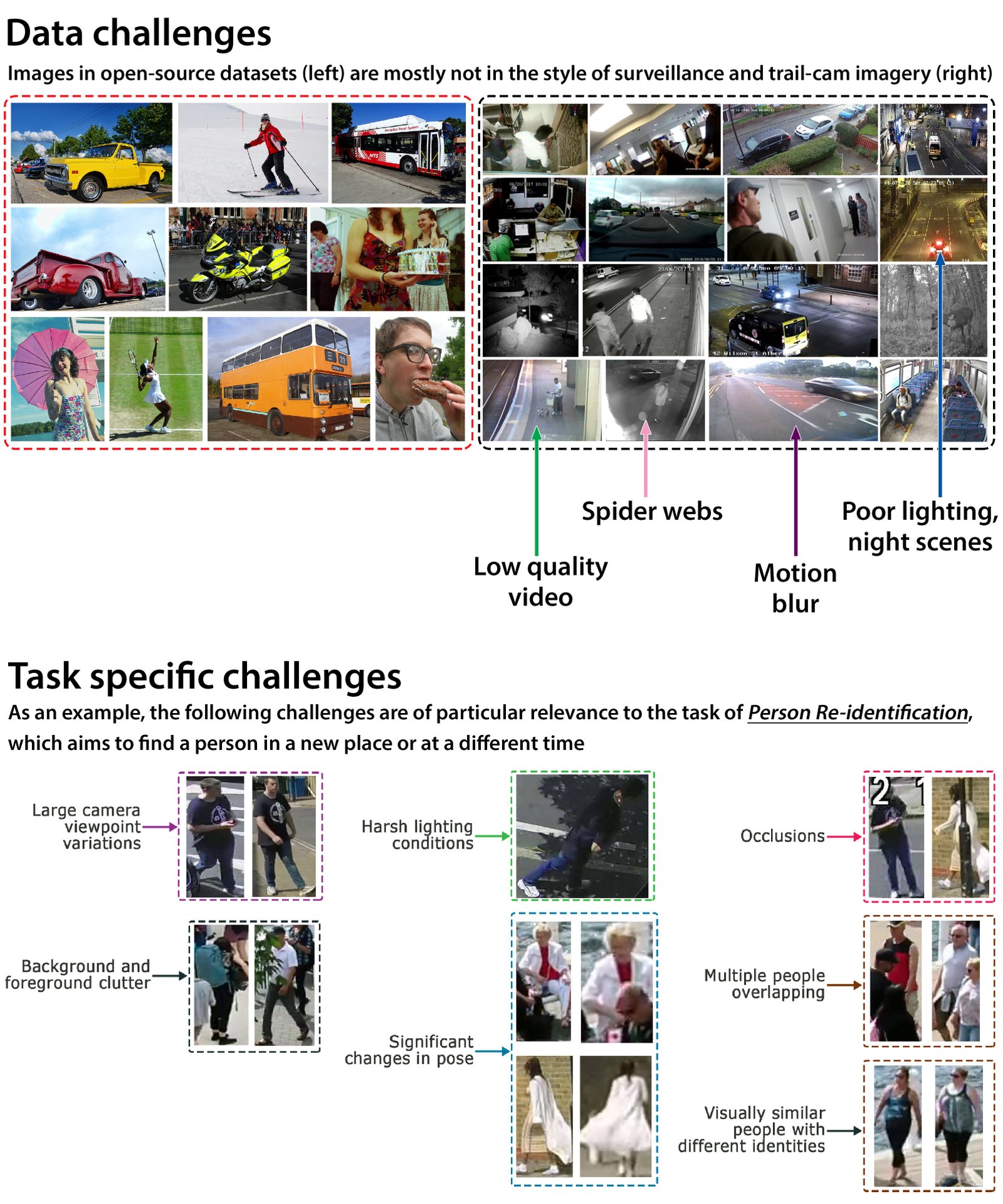

Existing available datasets are mostly populated with photographs capturing everyday scenes and events, such as social gatherings, weddings, sports events etc., as well as photos from commercial product shoots. The subjects in these images are typically well-framed, and people in these images are usually aware that they are being photographed and therefore tend to be posing and looking directly at the camera. Moreover, the scenes are usually sufficiently illuminated, and the image quality is good.

CCTV surveillance style footage, on the other hand, is often characterised by being low-quality (especially for grainy, poorly illuminated night-time scenes), and often contains textual artefacts such as time and date stamps. Furthermore, human subjects rarely look directly at the camera, and they are often at the periphery of the image or only partially in-frame. CCTV cameras are mostly positioned at elevated vantage points and thus they see a top-down view of subjects, which is seldom seen in non-surveillance style footage. Finally, a significant proportion of the surveillance style footage is captured at night-time when the camera’s near-Infrared (NIR) mode is active. Once again, there is an acute shortage of NIR images in the non-surveillance style datasets. The stark difference between non-surveillance style imagery and the type of imagery we typically deal with (CCTV, dashcam, bodycam, trail cam etc) is conveyed in Figure 3.

We built out our datasets in several innovative ways by using:

1. An auto-labelling strategy: Kinesense has access to a vast collection of CCTV footage that has been made available by several police forces for R&D purposes. This type of footage is exactly the kind of footage that we would expect to encounter in the wild. Additionally, the data is diverse – it contains hundreds of unique scenes captured under a wide range of illumination and weather conditions. However, the data is unlabelled. In order to harness the potential of this particularly valuable data source, we have used an auto-labelling strategy (supported by active learning) that leverages a pre-trained model to generate annotation information and only requires minimal human intervention (Figure 4a).

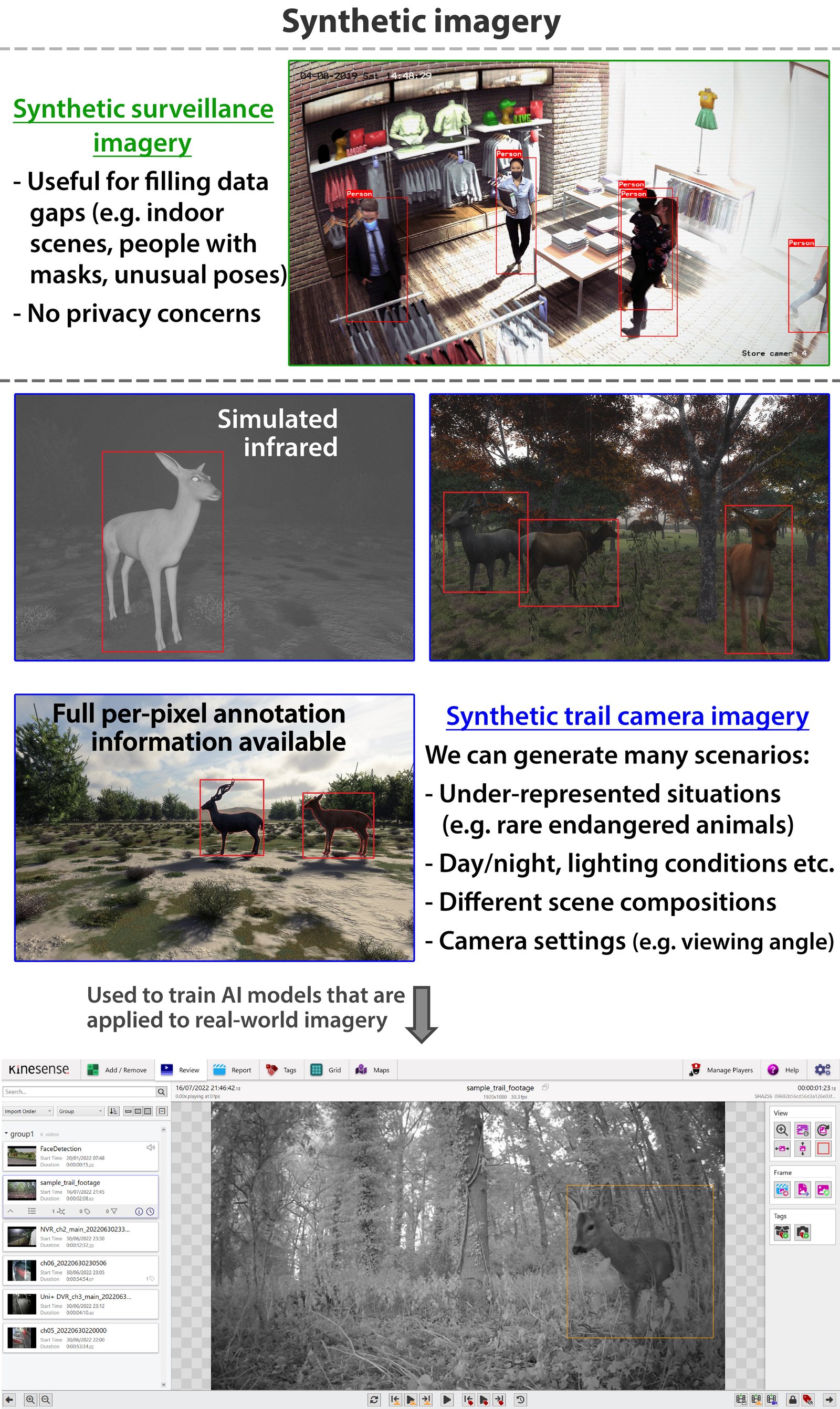

2. A synthetic data generation framework for bridging data gaps (i.e., when there was a lack of real-world data). This included cases such as interior scenes, people with face masks, and people in unusual poses (Figure 4b).

3. A custom augmentation pipeline that transfers the style of CCTV imagery to non-surveillance style images, which included adding video compression artefacts, simulating near-Infrared effect, overlaying timestamp information, warping images to mimic wide-angle lenses etc (Figure 4c).
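The auto-labelling strategy in item 1 can be illustrated with a minimal Python sketch. It assumes a pre-trained model that returns a confidence score per detection; the `Detection` type, the `triage` function, the threshold values and the frame identifiers are all hypothetical and are not taken from the Kinesense implementation. Confident predictions are accepted as labels, and only uncertain ones are queued for a human, most uncertain first.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    frame_id: str
    label: str
    confidence: float  # score from the pre-trained model, in [0, 1]


def triage(detections, accept_at=0.9, discard_below=0.3):
    """Split model predictions into auto-accepted labels and a human review queue.

    The thresholds are illustrative assumptions, not values used by Kinesense.
    """
    auto_labelled, review_queue = [], []
    for det in detections:
        if det.confidence >= accept_at:
            auto_labelled.append(det)   # trusted without human input
        elif det.confidence >= discard_below:
            review_queue.append(det)    # uncertain: worth a human look
        # anything lower is dropped as likely noise
    # Most uncertain first (closest to 0.5), in the spirit of uncertainty sampling.
    review_queue.sort(key=lambda d: abs(d.confidence - 0.5))
    return auto_labelled, review_queue


predictions = [
    Detection("cam01_000123", "person", 0.97),
    Detection("cam01_000124", "van", 0.55),
    Detection("cam02_000017", "car", 0.35),
    Detection("cam02_000018", "person", 0.10),
]
auto_labelled, review_queue = triage(predictions)
```

In a loop of this kind, the corrected labels from the review queue would typically be fed back into training, so the share of footage needing human attention shrinks over time.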

Our datasets contain rich, relevant, and diverse training data representative of surveillance footage that police deal with daily, and consequently, our AI models demonstrate a marked improvement versus off-the-shelf models.

Optimising inference latency is important as police officers are often under time pressure. By switching our AI models to ONNX (Open Neural Network eXchange) format, we achieved significant speed-ups (up to 5x faster). Moreover, ONNX models can leverage GPU acceleration by exploiting DirectML (which is supported out-of-the-box by almost all modern GPUs) without having to configure cumbersome NVIDIA CUDA drivers and tools.
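As a rough illustration of how an ONNX model can pick up GPU acceleration through DirectML, the sketch below chooses an ONNX Runtime execution provider with a CPU fallback. The priority order, the helper names and the model path passed to `load_session` are assumptions for illustration only; DirectML support also assumes that a DirectML-enabled build of ONNX Runtime is installed.

```python
PREFERRED_PROVIDERS = [
    "DmlExecutionProvider",   # DirectML
    "CUDAExecutionProvider",  # NVIDIA CUDA
    "CPUExecutionProvider",   # fallback when no GPU provider is present
]


def choose_providers(available):
    """Return the preferred providers that are actually available, in priority order."""
    chosen = [p for p in PREFERRED_PROVIDERS if p in available]
    return chosen or ["CPUExecutionProvider"]


def load_session(model_path):
    """Create an ONNX Runtime session using the best available provider."""
    import onnxruntime as ort  # imported lazily; requires the onnxruntime package

    providers = choose_providers(ort.get_available_providers())
    return ort.InferenceSession(model_path, providers=providers)
```

Keeping the provider choice in a separate function means the same code can run on GPU-equipped workstations and on CPU-only machines without changes.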

Many of the developments that we made with the surveillance use-case in mind are readily transferable to the wildlife conservation use-case (e.g., simulating near-Infrared (NIR) as an augmentation, as there is a profound lack of NIR training data available for certain species).

Additional Information:

Kinesense allows police to spend less time sifting through videos and more time doing what they are excellent at – safeguarding our communities. At the same time, we help conservationists to efficiently process millions of camera trap images so that they can make informed decisions around intervention strategies.

Evidence of impact:

The massive time savings mean that police can afford to devote more attention to tackling high-volume crime such as anti-social behaviour, theft, vandalism, illegal fly-tipping etc., which is often de-prioritised in over-stretched units.

Kinesense software leads to faster charging decisions. As a concrete example, Northumbria Police say they were able to give frontline officers 24-hour access to video tools and reduce the turnaround of CCTV submissions from three months to the same day. Even a simple case, such as a shoplifter arrested in the evening or at the weekend, could run into difficulties if the CCTV format was incompatible: the investigation would halt until a CCTV specialist came on duty, and often the suspect would have to be bailed. Innocent suspects, especially, stand to benefit from speedier resolutions.

Kinesense has helped to make a positive real-world impact in several ways. Here are four examples:

1. In the first six months of 2021, a UK police force used our technology to sift through 150,000 hours of video to help crack a high-profile homicide case. Our algorithms enabled them to save 98% of the time compared to manual review.

2. A UK police force’s Major Investigation Team obtained 643 hours of CCTV video in relation to a serious crime. Using Kinesense software to filter certain events, the force needed to watch just 70 hours of it, resulting in a saving of 573 hours of actual viewing time.

3. In another high-profile murder case, Greater Manchester Police used Kinesense to process 1,660 CCTV clips when investigating the murder of Sian Roberts. In 2017, Kinesense was used in court as Glynn Williams was convicted of her murder and sentenced to 21 years in prison.

4. As part of the biggest investigation that Kent Police has ever embarked on, which involved seizing in excess of 155,000 hours (over 18 years) of footage from multiple sources (80TB worth of material), Kinesense was credited with enabling a single dedicated investigator to expeditiously go through all of this material and accurately report on evidence of offending.
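The saving quoted in example 2 can be checked with simple arithmetic. The short Python sketch below uses only the figures stated above (643 hours obtained, 70 hours watched); the function name is our own.

```python
def viewing_time_saved(total_hours, watched_hours):
    """Return (hours saved, percentage saved) when only part of the footage is watched."""
    saved = total_hours - watched_hours
    return saved, 100.0 * saved / total_hours


saved_hours, saved_pct = viewing_time_saved(643, 70)
print(f"{saved_hours} hours saved ({saved_pct:.1f}%)")  # 573 hours saved (89.1%)
```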

Mission statement

At Kinesense, we place our shared ethical values at the heart of everything we do. While advances in AI have raised questions around privacy and ethics, we believe that it is critical to our collective long-term success to demonstrate an unwavering commitment to high ethical standards. Kinesense does not develop software that can be used for privacy invasive activities or encroaching on civil liberties. Instead, our software is only available to reputable agencies and police forces for analysing video content after a crime has been committed and where there is a need to find evidence and leads for an investigation.

Kinesense project 2021

Nominated Company: Kinesense

Nominated Award: Best Use of AI in Sector

Kinesense develops video analytics algorithms to enable the automatic searching of video content, mainly serving the law enforcement and security sectors.

Background:

Back in 2009, Kinesense set out to tackle a longstanding problem faced by police forces and investigators: how to find critical evidence hidden in thousands of hours of video. The time and effort required to retrieve, view, analyse and report on video footage as evidence is a huge drain on resources. There are an estimated 5,000 different CCTV video formats, many of which are not widely supported by common media players. This means that although a crime may have been captured on video, officers are often unable to readily view the video.

An additional problem is the time it takes to manually watch video. Typically, it takes between 2 and 2.5 hours to carefully sift through one hour of video and gain insights. The burden of watching videos in the Criminal Justice system costs the average police force hundreds of thousands of hours per year (a small police force will process over a million hours of video a year).

In the context of the Criminal Justice market, the ability to process CCTV footage quickly and with little effort is pivotal, especially at the early stage of investigations, where time is often of the essence and investigators frequently rely on CCTV to piece together events and establish new leads.

Specialist tools for video ingest and analysis are needed to get actionable intelligence from video faster, and ultimately, help officers to secure more convictions and improve overall crime solving rates. Kinesense is a leading provider of such software tools to police forces throughout Europe and further afield.

Embracing AI:

Over the years, Kinesense has strived to incorporate the latest advances in deep learning technology. Currently, deep learning analytics are used for tasks such as object detection, tracking and event classification. Additionally, several deep learning techniques are under active development and are planned for inclusion in upcoming releases.

Furthermore, Kinesense is engaged in an AI-centric research project, namely the Visual Intelligence Search Platform (VISP), which is a collaboration with the Sigmedia Group in Trinity College Dublin and industry-partner, Overcast. The VISP project seeks to improve workflows for transcoding, analysing, and distributing videos using deep learning video analytics and cloud-based processing technologies. Many of our AI activities are being carried out in collaboration with our consortium partners.

Mission statement:

At Kinesense, we place our shared ethical values at the heart of everything we do. While advances in AI have raised questions around privacy and ethics, we believe that it is critical to our collective long-term success to demonstrate an unwavering commitment to high ethical standards. Kinesense does not develop software that can be used for privacy invasive activities or encroaching on civil liberties. Instead, our software is only available to reputable agencies and police forces for analysing video content after a crime has been committed and where there is a need to find evidence and leads for an investigation.

Reason for Nomination:

Problem to solve and societal/economic impact:

In 2018, over 80 million CCTV cameras were shipped globally, with this expected to double by 2022. 88% of cameras record nonstop, 24 hours a day. Video data is exploding. In 2018 alone, the cameras shipped had enough storage capacity for 37 billion hours of video. Video provides security agencies and police forces with a rich visual resource; however, the challenge is making optimum use of it. Whilst police forces recognize the value that CCTV video brings to investigations, many police forces are already over-stretched and cannot devote personnel to watch long video clips.

Kinesense tackles this problem by leveraging a range of deep learning techniques for performing video content search and analysis, thereby dramatically reducing the time that personnel need to spend going through videos. One example where Kinesense helped officers occurred during the first six months of 2021, when a UK police force used our technology to sift through 150,000 hours of video to help crack a serious homicide case. The algorithm enabled them to save 98% of the time compared to manual review.

Police forces must be able to trust the efficacy of the deep learning methods and ensure important events are not missed, even when contending with complex scenes. As such, there is a strong emphasis on developing accurate deep learning networks that can reliably identify objects or behaviours. It is also important that the algorithms can run within a reasonable timeframe as many of our end users have hardware constraints (i.e., no access to GPUs), and must rely on slower CPUs.

Specifically, the types of computer vision problems that we are trying to solve using deep learning methods include:

• Object detection and tracking

• Classification of motion events

• Attribute-based object retrieval and search (i.e., find an object in a video based on a textual description as input, such as finding a man wearing a blue top)

• Person reidentification across multiple videos

• Individual person segmentation for automated redaction

High-quality training data is a key requirement for these tasks. While there are some useful open-source datasets (especially for tasks such as detecting people, where there is an abundance of training data), it was necessary to curate our own datasets so that we could achieve the highest accuracy levels and so that we could tailor our algorithms to meet the precise needs of police forces. There are several challenges related to dataset curation and we had to resort to innovative strategies to ensure our datasets contained rich, relevant, and diverse training samples.

Followed Approach:

We generated annotated data in three different ways:

1. Auto-labelling: Kinesense has access to a vast collection of CCTV footage that has been made available by several police forces for R&D purposes. This type of footage is exactly the kind of footage that we would expect to encounter in the wild. Additionally, the data is diverse – it contains hundreds of unique scenes captured under a wide range of illumination and weather conditions. However, the data is unlabelled. In order to harness the potential of this particularly valuable data source, we have used an auto-labelling strategy (supported by active learning) that leverages a pre-trained model to generate annotation information and only requires minimal human intervention.

2. Synthetic data: a semi-automated synthetic data generation pipeline that positions 3D models of target objects and generates an arbitrary number of rendered images along with the associated ground truth annotations. The synthetic data is used to bridge data gaps (e.g. adding more people wearing face coverings) and cover edge cases such as people in unusual poses (e.g. bending down, carrying a child).

3. Style transfer as augmentation – applying CCTV style to non-surveillance style imagery: performing a range of image operations such as adding video compression artefacts, converting colour imagery to simulated near-Infrared, overlaying timestamp information, warping images to mimic wide-angle lenses. Models trained with our curated dataset demonstrated a marked improvement over baseline models, particularly in challenging scenes.
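To make the style-transfer augmentation in item 3 more concrete, here is a deliberately simplified Python sketch that operates on a tiny image held as nested lists. Every operation is a crude stand-in chosen for illustration and is an assumption on our part: a luminance conversion approximates the look of NIR mode, coarse quantisation imitates compression banding, and a bright block marks where a timestamp overlay would sit. A production pipeline would use an image library and more faithful models of each effect.

```python
def to_simulated_nir(image):
    """Collapse RGB to one luminance channel as a crude stand-in for NIR mode."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in image]


def quantise(gray, step=32):
    """Coarsely quantise intensities to imitate heavy compression banding."""
    return [[(v // step) * step for v in row] for row in gray]


def overlay_timestamp_box(gray, height=1, width=3, value=255):
    """Paint a bright block in the top-left corner where a timestamp would sit."""
    out = [row[:] for row in gray]
    for y in range(min(height, len(out))):
        for x in range(min(width, len(out[y]))):
            out[y][x] = value
    return out


def cctv_style(image):
    """Chain the three illustrative operations."""
    return overlay_timestamp_box(quantise(to_simulated_nir(image)))


# A tiny 2x4 RGB image used purely as a demonstration input.
image = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255), (200, 200, 200)],
    [(10, 10, 10), (100, 150, 200), (255, 255, 255), (0, 0, 0)],
]
styled = cctv_style(image)
```

Because each step is a separate function, individual effects can be switched on or off, or applied with random parameters, when generating augmented training samples.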

Novel Automatic Codec Identification Tool:

There are an estimated 5,000 different CCTV video formats, many of which are obscure and cannot be opened by common media players. Kinesense, along with researchers in the Sigmedia Group in Trinity College Dublin, has developed a tool for automatically identifying video codecs so that unrecognised videos can be opened and played back. This tool is based on a convolutional neural network (CNN) model trained on the Kinesense video format dataset to identify unknown proprietary video codecs. Results show 97% accuracy at identifying the correct codec.

Additional Information:

Motivation for building out our dataset generation capabilities:

Existing available datasets are mostly populated with photographs capturing everyday scenes and events, such as social gatherings, weddings, sports events etc., as well as photos from commercial product shoots. The subjects in these images are typically well-framed, and people in these images are usually aware that they are being photographed and therefore tend to be posing and looking directly at the camera. Moreover, the scenes are usually sufficiently illuminated and the image quality is good.

CCTV surveillance style footage, on the other hand, is often characterised by being low-quality, low-resolution, and low-contrast (especially for poorly illuminated night time scenes), and often contains textual artefacts such as time and date stamps. Furthermore, human subjects rarely look directly at the camera, and they are often at the periphery of the image or only partially in-frame. CCTV cameras are mostly positioned at elevated vantage points and thus they see a top-down view of subjects, which is seldom seen in non-surveillance style footage. Finally, a significant proportion of the surveillance style footage is captured at night-time when the camera’s near-Infrared (NIR) mode is active. Once again, there is an acute shortage of NIR images in the non-surveillance style datasets.

In addition, existing datasets are not perfectly aligned with our objectives. For example, the MS COCO dataset for object detection contains 80 distinct categories, including vehicles such as Cars and Trucks; however, police forces also want to identify other vehicle types such as Vans, which are not separately labelled in MS COCO. With this in mind, deep learning methods trained on existing, non-surveillance style datasets would likely struggle to perform credibly when applied to surveillance style footage.

Difficulties encountered:

One issue that arose as part of the auto-labelling process concerned how certain vehicles should be categorised. It was discovered that many vehicles are ambiguous. For example, there were questions around how to categorise vehicles such as MPVs, hybrid cars and minivans: should they be considered cars or vans? Similarly, it was often unclear if vehicles such as campervans, delivery vans and ambulances should be labelled as vans or trucks.

This problem was remedied by spelling out clear definitions for each object type. We also realised that police forces would likely encounter the same ambiguity, so we designed our algorithms to output confidence scores indicating the probability of an object belonging to each class. If an officer searches for a van, for example, results for other vehicle types are displayed too, as long as their probability of being a van is above a certain threshold.
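The threshold-based search described above can be sketched in a few lines of Python. The data layout, the `search_by_class` name, the 0.3 threshold and the example probabilities are all hypothetical and serve only to show the idea: a vehicle that the model considers most likely to be a car still appears in a search for vans if its van probability is high enough.

```python
def search_by_class(detections, wanted, threshold=0.3):
    """Return detections whose probability for `wanted` meets the threshold, best first."""
    hits = [d for d in detections if d["probs"].get(wanted, 0.0) >= threshold]
    return sorted(hits, key=lambda d: d["probs"][wanted], reverse=True)


detections = [
    {"id": "veh-1", "probs": {"car": 0.15, "van": 0.80, "truck": 0.05}},
    {"id": "veh-2", "probs": {"car": 0.55, "van": 0.40, "truck": 0.05}},  # ambiguous, MPV-like
    {"id": "veh-3", "probs": {"car": 0.95, "van": 0.03, "truck": 0.02}},
]
van_hits = [d["id"] for d in search_by_class(detections, "van")]
```

Here `veh-2` is returned for a van search even though its top class is car, which is the behaviour wanted for ambiguous vehicles; lowering the threshold trades more results to review for a lower chance of missing the vehicle of interest.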

Dissemination of deep learning work:

Work on the Automatic Codec Identification tool is due to be presented at ICIP 2021 in Alaska (28th IEEE International Conference on Image Processing). Title: “CNN-Based Video Codec Classifier for multimedia forensics”. Authors: Rodrigo Pessoa, Anil Kokaram, Francois Pitie (Sigmedia Group, Electronic and Electrical Engineering Dept TCD) and Mark Sugrue (Kinesense).